Partial Differential Equations on Graphs

This lecture was first created by Leon Bungert for Masters students in the winter semester 2024-2025. In the next winter semester, I inherited from this lecture, and added some material to it. The lecture is paired up with practical sessions, involving both coding and theory exercises. A significant part of the lecture is based on this CalVar course by Jeff Calder. Please click here for the lecture notes.

Overview of the course

Graph-based learning

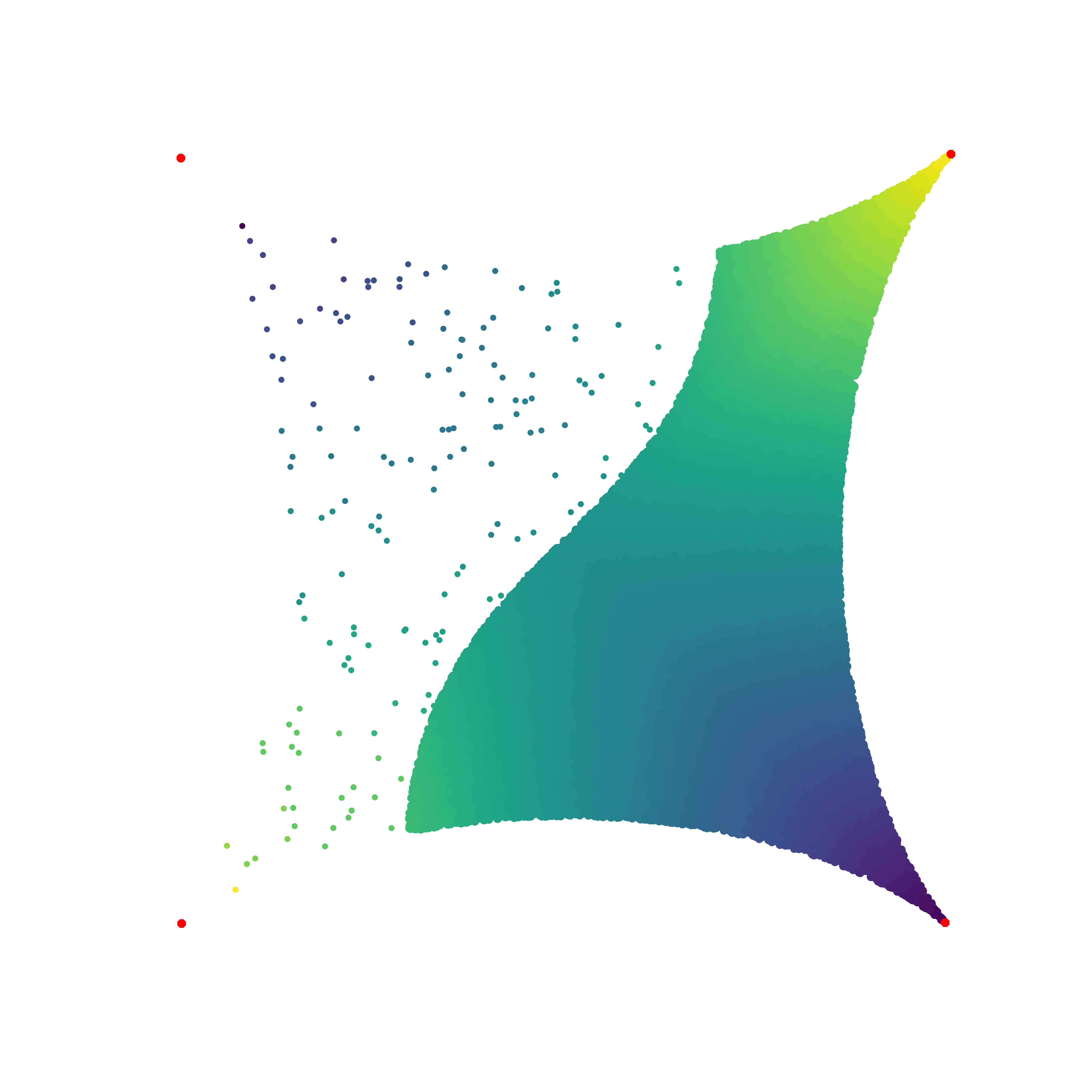

In a first part, we introduce the concept of random graph, focusing on $k$-NN and $\epsilon$-ball graphs, that naturally arises in the context of machine learning. We show a first result showing that in the large data limit, an $\epsilon$-ball graph is almost surelmy connected.

We then introduce, for a graph with weight matrix $W$ and degree matrix $D$, the Graph Laplacian $$ L := D-W. $$ This object is the central object that will be studied in the lecture. We study the elementary spectral properties of $L$, and show the Cheeger inequality for graphs, which reads $$ \frac{\lambda_2(G)}{2} \leq \text{Cheeg}(G) \leq \sqrt{2 \lambda_2(G)}. $$ This motivates the fact that spectral clustering is a reasonable approximation of the normalized cut problem.

We then review other well-known graph-based algorithms, like PageRank, t-SNE and Semi-Supervised Learning (SSL). When possible, we try to give different perspectives on each problem, either one from PDE or calculus of variation or random walks.

Discrete to continuum

A currently very active area of research is concerned with the following question: what happens in the large data limit ? In many contexts, we can show that the Graph Laplacian converges (in some sense) toward a weighted Laplace operator $$ \Delta_\rho u := \rho^{-1} \text{div}\left( \rho^2 u\right) $$ where $\rho$ is the distribution of the data. This kind of result can be proven in two different ways:

- Uniform convergence: Using concentration inequalities (in our case, Bernstein's) and Taylor expansion, we can show that the Graph Laplacian is a consistent approximation of the weighted Laplacian. Using the maximum principle, we can show convergence of the solutions;

- $\Gamma$-convergence: By leveraging tools from calculus of variations and Sobolev spaces, we can show that the Dirichlet energy of the graph Laplacian $\Gamma$-converges toward its continous counterpart.