In Machine Learning, graph-based techniques can succesfully deal with tasks like semi-supervised learning or clustering of complex datasets. In the recent years, researchers managed to explain the successes and shortcomings of these approaches by showing that in the limit of infinite data, the graph laplacian actually converges to a weighted laplace operator. Using these results, we can use the graph laplacian as a numerical PDE solver.

The Graph Laplacian

Let $\Omega\subset \mathbb{R}^d$ and $\mu$ be a probability distribution supported on $\Omega$ with a positive continuous density $\rho $. Suppose that $V^{(n)} = \{x_1,\dots,x_n\}$ are i.i.d. samples of $\mu$ and let $\eta:(0,\infty)\to [0,\infty)$ be a compactly supported and non-increasing kernel.

We define the weight matrix $W^{(n)}$ as \begin{equation*} W_{ij}^{(n)} = \frac{2}{n\sigma_\eta\varepsilon_n^{d+2}} \eta\left( \frac{|x_i - x_j|}{\varepsilon_n} \right), \qquad i,j=1,\dots,n,\,i\neq j, \end{equation*} where $\varepsilon_n>0$ is a scaling parameter and $\sigma_\eta>0$ is a constant depending only on $\eta$ and $d$.

The graph Laplacian $L^{(n)}$ is then defined as $$ L^{(n)} := D^{(n)} - W^{(n)} $$ As a positive semi-definite symmetric matrix, the graph Laplacian has eigenvalues $$ 0 = \lambda_0^{(n)} \leq \lambda_1^{(n)} \leq \dots \leq \lambda_{n-1}^{(n)}. $$

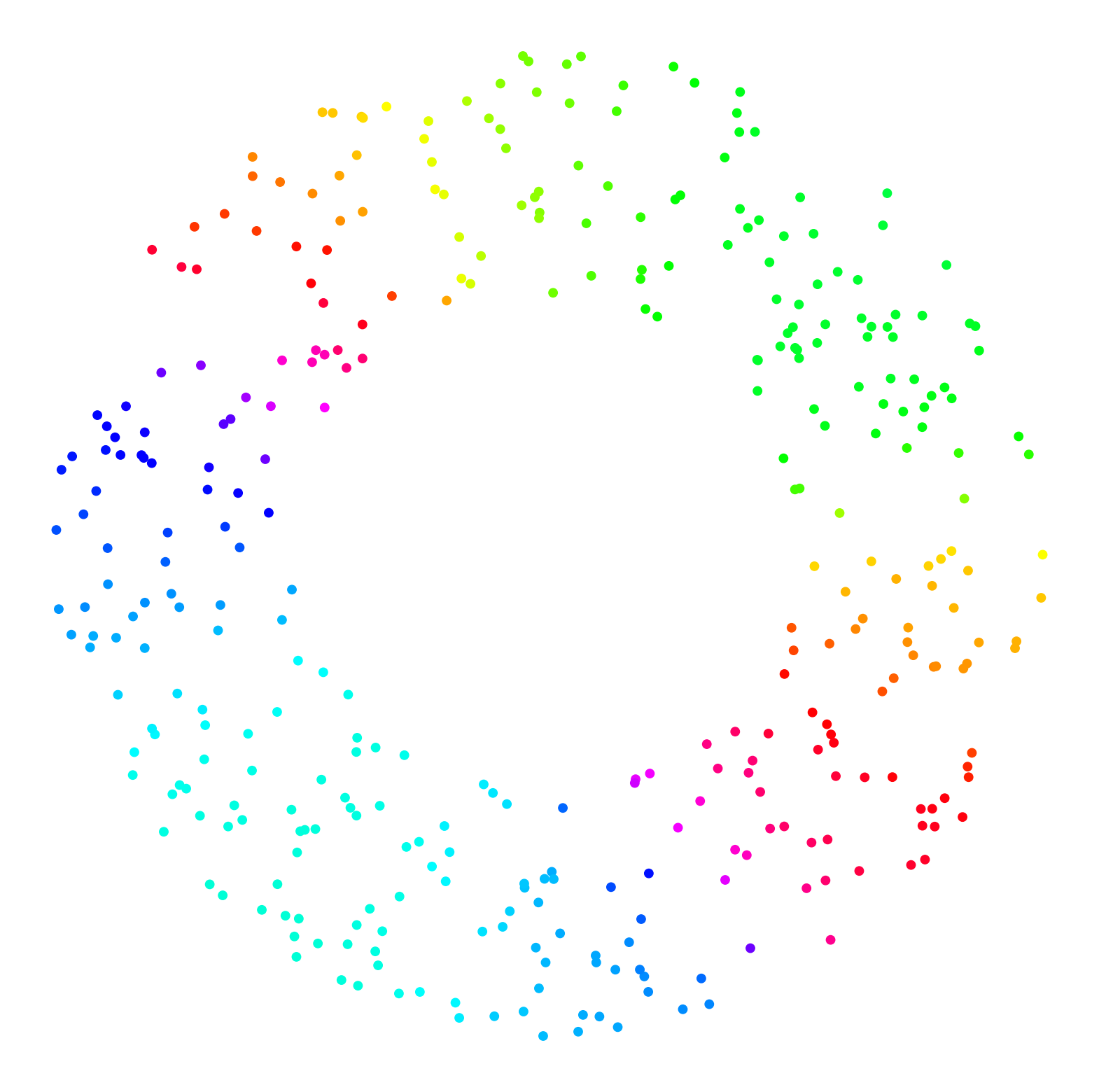

In the following figure, you can see the set of vertices, the graph built on it and the eigenfunction associated to $\lambda_1^{(n)}$:

In the context of spectral clustering, the eigenvectors associated to the Graph Laplacian are used to embbed the vertices of the graph into a space where the different clusters are easier to distinguish. Contrary to more straightforward techniques like $k$-means, spectral clustering is able to deal with dataset with complex geometries, as it is illustrated here.

Continuum limit

Now, let's imagine that the number of data samples goes to infinity. What is the limit, if it exists, of the eigenvalues of $L^{(n)}$ ?

This question was first studied in [1]. Later, researchers managed to give complete proof of convergence along with rates (see for instance [2]). Formally, the results can be stated the following way:

Hence, for $n$ large enough, the graph Laplacian approximate the previous weighted Laplacian and hence can be used as a numerical solver for this equation. In particular, when taking a constant $\rho$, we actually compute the usual Neumann eigenvalue problem $$ \begin{cases} -\Delta u = \mu_k(\Omega) u &\mbox { in } \Omega,\\ \frac{\partial u}{\partial n} = 0 &\mbox { on } \partial \Omega. \end{cases} $$

Coupling this graph Laplacian approach with a network-based level set approach, we managed to provide a fully meshless shape optimization method.

Note that the graph laplacian do not only allow to solve Neumann eigenvalue problems; it can for instance be used to compute approximate solutions to the Poisson equation, as is shown in the companion article.

Companion articles :

-

Meshless Shape Optimization using Neural Networks and Partial Differential Equations on Graphs

(2025),

with L. BUNGERT ,

Scale Space and Variational Methods in Computer Vision.

References :

-

Consistency of spectral clustering

(2008),

U. VON LUXBURG, M. BELKIN and O. BOUSQUET,

The Annals of Statistics. -

Error estimates for spectral convergence of the graph Laplacian on random geometric graphs towards the Laplace-Beltrami operator

(2018),

N. GARCIA TRILLOS, M. GERLACH and D. SLEPCEV,

Foundations of Computational Mathematics.